We take a deep dive into the increasingly important role of AI in algo execution with the head of quantitative strategies and data group for EMEA equities execution at BofA Securities.

DeWitt currently looks after research and development of equities execution algorithms in EMEA, and has 20 years’ experience designing and building execution algorithms across the EMEA and APAC regions. Before joining BofA Securities in 2019, he led the statistical modelling and development group for EMEA and APAC at Barclays, where he was also involved in building execution algorithms.

What do you find exciting about the present direction of algorithmic execution?

Algorithmic execution has remained relatively static on the surface. The algorithm names, objectives and benchmarks have not changed much in 20 years. Under the surface, however, there is much evolution. Two developments I find extremely interesting are the increasing role of artificial intelligence in execution and the innovation in price formation and market structure, and, of course, the combination of the two! Let’s talk about AI here.

How do you see artificial intelligence being adapted to algorithmic execution?

AI is a broad term covering a wide array of techniques: at one extreme you have simple univariate linear regression, whilst at the other end of the complexity spectrum there are generative AI models with 100+ billion parameters powering the likes of ChatGPT, Claude and Gemini. Various forms of machine learning have been used over the last two decades to improve existing statistical methods and to bring innovation to algorithmic trading. Some of these have produced more accurate predictive models of volume, volatility, dark liquidity and other intraday time series to inform algorithmic trading decisions. Unsupervised learning techniques, such as dimensionality reduction (PCA, t-SNE) and clustering (K-means), have been used to automate algo selection and to suggest improvements to execution choices in transaction cost analysis.
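To make the unsupervised step concrete, here is a minimal sketch, not the approach of any particular desk, of dimensionality reduction followed by clustering on order features. The three features (participation rate, spread in basis points, volatility), the synthetic orders and the helper names `pca_project` and `kmeans` are all assumptions made for illustration; only NumPy is used.

```python
import numpy as np


def pca_project(x, n_components):
    """Standardise the features and project onto the top principal components."""
    z = (x - x.mean(axis=0)) / x.std(axis=0)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    return z @ vt[:n_components].T


def kmeans(x, k, n_iter=50):
    """Plain k-means with a deterministic farthest-first initialisation."""
    centroids = [x[0]]
    for _ in range(1, k):
        # Distance of every point to its nearest already-chosen centroid.
        d = np.min([np.linalg.norm(x - c, axis=1) for c in centroids], axis=0)
        centroids.append(x[d.argmax()])
    centroids = np.array(centroids)

    for _ in range(n_iter):
        dists = np.linalg.norm(x[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        new_centroids = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(new_centroids, centroids):
            break
        centroids = new_centroids
    return labels, centroids


# Synthetic orders (hypothetical): columns are participation rate,
# spread in bps and volatility. Two deliberately distinct groups.
rng = np.random.default_rng(1)
noise = np.array([0.01, 1.0, 0.03])
passive = np.array([0.05, 5.0, 0.20]) + noise * rng.normal(size=(30, 3))
urgent = np.array([0.25, 25.0, 0.60]) + noise * rng.normal(size=(30, 3))
orders = np.vstack([passive, urgent])

embedded = pca_project(orders, n_components=2)
labels, centroids = kmeans(embedded, k=2)
```

In a transaction cost analysis setting, each cluster could then be compared on realised cost per algorithm; that step is omitted here because it depends on data the sketch does not have.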

Here we are referring to deep learning and reinforcement learning combined in generative AI. Let’s break these topics into two categories: structured data (e.g. numerical, categorical) and unstructured data (e.g. text, video).

Structured data

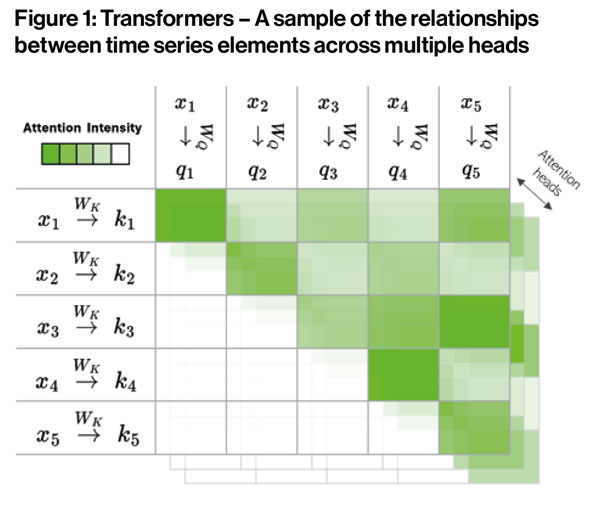

Recent advances in deep learning related to generative AI are showing promise in predicting time series data. Numerous papers from highly credible researchers (see references) point to the potential of the transformer architecture, which is the backbone of the likes of ChatGPT, Claude and Gemini. This neural network architecture is designed to pay attention to streams of information and to hold context between any pair of points in a sequence, however far apart. This is what enables transformers to understand language so well. The architecture can also attend to multiple sequences in parallel and tie them together across multiple heads for a broader understanding (Figure 1). An example would be looking across the prices of multiple assets in unison and learning the non-linear relationships that may provide predictive power for the direction of the asset in question.
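The attention mechanism described here can be sketched in a few lines. What follows is a minimal, untrained illustration of causal multi-head self-attention over a window of multi-asset returns, assuming random weights and synthetic returns; it is not the Foresight model or any production architecture, and the function and variable names are chosen for this example only.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, n_heads):
    """Causal multi-head self-attention.

    x: (T, d_model) array, one row per time step.
    Returns the output (T, d_model) and the attention weights (n_heads, T, T).
    """
    T, d_model = x.shape
    d_head = d_model // n_heads

    def split(m):
        # (T, d_model) -> (n_heads, T, d_head)
        return m.reshape(T, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)

    # Scaled dot-product scores between every pair of time steps, per head.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)

    # Causal mask: a time step may attend to itself and the past only.
    future = np.triu(np.ones((T, T), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)

    weights = softmax(scores, axis=-1)
    heads = weights @ v  # (n_heads, T, d_head)

    # Concatenate the heads and mix them with the output projection.
    concat = heads.transpose(1, 0, 2).reshape(T, d_model)
    return concat @ w_o, weights


# Synthetic example: 6 time steps of returns for 4 assets, 2 heads.
rng = np.random.default_rng(0)
T, n_assets, n_heads = 6, 4, 2
returns = rng.normal(scale=0.01, size=(T, n_assets))
w_q, w_k, w_v, w_o = (rng.normal(size=(n_assets, n_assets)) for _ in range(4))

out, attn = multi_head_self_attention(returns, w_q, w_k, w_v, w_o, n_heads)
```

Row `t` of `attn[h]` shows how much head `h` draws on each earlier time step when forming its representation at time `t`. In a trained model these weights would be learned from data rather than drawn at random.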

Role in algorithmic trading

We have been exploring the transformer architecture within our Foresight product. Foresight aims to improve execution by learning our clients’ and traders’ alpha from prior executions and combining that with a market move forecast and the order’s expected impact. The combination of these factors helps us improve the parameterisation of the algorithm to achieve better execution. Eighteen months of research and a year of experimentation and tuning are now resulting in very encouraging improvements in performance.

For our market move forecast model, we use a “long short-term memory” (LSTM) deep learning architecture, which has given us, on average, better-than-random results across our entire order population this year. Based on recent publications, we are researching the use of transformers as a replacement for the LSTM model. Our offline, out-of-sample results indicate promising improvements in predictive power. To take advantage of the strength of the architecture, we are also looking at new ways of grouping feature sets across multiple heads to further improve the outcomes. It is clear this is only the beginning and there is much more we can do in this space; it is exciting to see how the direction evolves. I have included several references to interesting papers related to this area below.

Unstructured data

Natural language processing has been used for over a decade for sentiment analysis of news and corporate actions, and for fast processing of other financial documents in search of a trading edge. Recent advances in large language models (LLMs), and their seeming ability to link together facts and even suggest ideas, open a new dimension of possible applications in trading. The most obvious areas this technology will affect are the technology and operational sides of trading: speeding up and possibly democratising coding and research, as well as automating tasks that were previously routine for humans to perform but difficult for machines to replicate due to the nuances of language. There is also a fundamental knowledge distillation that was not previously possible. For example, summarising thousands of meetings across different locations and languages for key themes and insights was not previously easy to achieve, and can lead to new, previously unconsidered avenues of information and trends. Unstructured data has become significantly more powerful than it was five years ago, and larger companies that figure out how to leverage their vast amounts of it could be at a strong advantage.

Combined

Things get extremely interesting when structured data and unstructured data models meet. Toolformer (2023), a paper from Meta AI Research by Schick et al., describes the idea that LLMs can learn to call external APIs to utilise the “brain” of another model, or to drive the behaviour of another architecture. As a toy example, it has been observed that LLMs are not good at basic math, but an LLM can learn to call a calculator API, or Wolfram’s Mathematica for more advanced solutions. ChatGPT 4.0 does this already, calling Python for solutions or sourcing web pages for additional information. At the same time, an LLM can learn complex task planning, integrating various data modalities to control the mechanics of a physical device such as a robotic arm, and explain its actions via its inner monologue.
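The calculator toy example can be sketched as follows. This is a minimal illustration of the runtime side of tool use only: the language model is not included, the draft text stands in for model output, and the bracketed call syntax, the `TOOLS` registry and the function names are assumptions for this example, loosely inspired by the inline API calls described in the Toolformer paper.

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.USub: operator.neg,
}


def calculator(expression: str) -> float:
    """Evaluate a basic arithmetic expression without using eval()."""

    def ev(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return ev(ast.parse(expression.strip(), mode="eval").body)


# Hypothetical tool registry: tool name -> callable taking a string argument.
TOOLS = {"Calculator": calculator}

# Matches inline calls of the form [ToolName(argument)].
_CALL = re.compile(r"\[(\w+)\((.*?)\)\]")


def execute_tool_calls(text: str) -> str:
    """Replace each known inline tool call in the text with the tool's result."""

    def run(match):
        name, argument = match.group(1), match.group(2)
        tool = TOOLS.get(name)
        if tool is None:
            return match.group(0)  # leave unknown tools untouched
        return f"{tool(argument):.2f}"

    return _CALL.sub(run, text)


# Stand-in for text produced by a model that has learned to emit tool calls.
draft = "The notional is [Calculator(1250 * 101.5)] EUR."
print(execute_tool_calls(draft))  # The notional is 126875.00 EUR.
```

The design point is that the model only has to learn when to ask and what to ask for; the arithmetic itself is delegated to code that is exact. The same pattern would extend to other registered tools, such as a cost model or an optimiser.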

The combination opens many opportunities for collating multiple modalities not previously considered together: market data aggregations, text information such as real-time chat scrapes, and downstream model outputs, all feeding into signals for triggering trading decisions. For example, looking for blocks across chats, calling a cost model, calling an optimiser, scanning open algorithms for liquidity, and pricing the block back into a chat for immediate, actionable execution. I am most curious how readers will use this technology and its more advanced generations, which are surely not far away…

Related Research:

- Barez, F., Bilokon, P., Gervais, A., & Lisitsyn, N. (2023). Exploring the advantages of transformers for high-frequency trading. arXiv preprint arXiv:2302.13850.

- Bilokon, P., & Qiu, Y. (2023). Transformers versus LSTMs for electronic trading. arXiv preprint arXiv:2309.11400. arxiv.org/pdf/2309.11400

- Kolm, P. N., & Westray, N. (2024). Improving deep learning of alpha term structures from the order book. Available at SSRN.

- Königstein, N. (2023). Dynamic and context-dependent stock price prediction using attention modules and news sentiment. Digital Finance, 5(3), 449-481. arXiv:2205.01639.

- Lim, B., & Zohren, S. (2021). Time-series forecasting with deep learning: a survey. Philosophical Transactions of the Royal Society A, 379(2194), 20200209.

- Schick et al. (2023). Toolformer: Language models can teach themselves to use tools. CoRR, abs/2302.04761.

©Markets Media Europe 2024
